The Symptoms

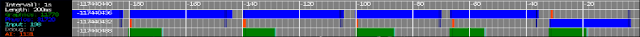

Most of the time I tested my game on my PC because I simply had no Xbox available. Now that I have one, I tested the game on the Xbox and got really bad results: it ran at only 40-50 frames per second on average. So I looked at my scheduling visualizer to search for the bottleneck (see this blogpost from March for a description of the visualizer):

Task execution times Xbox 360

In the test scene there were ca. ten missiles and 15 spacecraft (enemies + own ships), and the frame rate was down to ca. 35 fps. Very bad! The bottleneck was the physics task (blue bar), which took 31 ms per frame on average. The graphics task (green bar) always had to wait for the physics task, so that was where I had to search for my problem.

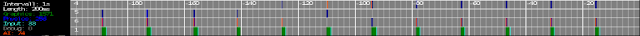

And just for fun the same scene on my PC (Intel Quadcore 2.5 GHz + Radeon HD 6700):

Task execution times PC (Intel Quadcore 2.5 GHz + Radeon HD 6700)

You can see that there is absolutely no performance problem on the PC! You can also see that the Xenon CPU used in the Xbox is really, really slow: the physics calculations take over a hundred times longer. OK, it's an unfair comparison; the Xenon is from 2005 and my Core 2 Quad Q9300 is from 2008. But there must be some other problems as well. A factor of 100 is just too much and can't be attributed to the hardware alone. Maybe there are some problems specific to the .NET Compact Framework on the Xbox.

What I learned from this is that you have to test all the time on the hardware your game is targeted for.

The Problem

Searching for the problem, I had a closer look at the Jitter Physics source code for the first time. The engine's debug timers showed that the engine spent 95% of the time in the collision detection system, which uses the Sweep and Prune algorithm. A very good description of this algorithm and its implementation can be found on the Jitter homepage. The core idea behind this and most other collision detection algorithms is to first figure out which of the possible collisions can actually happen and put those pairs into a list. This step is called the broadphase. In the next step (the narrowphase), only the object pairs in that list are actually tested for collision, using a more complex check that also calculates things like the collision points.
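To make the broadphase idea concrete, here is a minimal sketch of a sweep along one axis. It is not Jitter's code: Jitter is written in C# and its implementation is more elaborate. The `Box` tuple layout and the function name are my own assumptions for illustration, and the example is 2D to keep it short.

```python
from typing import List, Tuple

# Hypothetical axis-aligned bounding box: (min_x, max_x, min_y, max_y)
Box = Tuple[float, float, float, float]


def broadphase_sweep_and_prune(boxes: List[Box]) -> List[Tuple[int, int]]:
    """Return index pairs whose boxes overlap; these are only collision candidates."""
    # Sweep: visit the boxes in order of their lower x bound.
    order = sorted(range(len(boxes)), key=lambda i: boxes[i][0])
    active: List[int] = []  # boxes whose x interval may still overlap later ones
    pairs: List[Tuple[int, int]] = []
    for i in order:
        min_x, max_x, min_y, max_y = boxes[i]
        # Prune: drop boxes whose x interval ended before this one starts.
        active = [j for j in active if boxes[j][1] >= min_x]
        for j in active:
            # The x intervals overlap, so only the y intervals need checking.
            if boxes[j][2] <= max_y and min_y <= boxes[j][3]:
                pairs.append((min(i, j), max(i, j)))
        active.append(i)
    return sorted(pairs)


boxes = [
    (0.0, 2.0, 0.0, 2.0),    # 0: overlaps box 1
    (1.0, 3.0, 1.0, 3.0),    # 1
    (10.0, 11.0, 0.0, 1.0),  # 2: far away on the x axis
    (1.5, 2.5, 5.0, 6.0),    # 3: overlaps 0 and 1 on x, but not on y
]
candidates = broadphase_sweep_and_prune(boxes)
```

Only the pairs in `candidates` would be handed to the narrowphase. The point is that every additional object in the scene adds work to this step, whether or not it ever really collides with anything.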

After reading the article about Sweep and Prune I had an idea what the reason for my performance problems could be.

I had put the perception for the AI (also used for the player's radar) into the physics system. Perception means figuring out which other game entities the AI can see right now. So every physics object carried a sensor collision shape around to "see" surrounding objects. Every game entity inside the shape can be seen by the AI. This is illustrated in the picture below:

I have drawn circles into the radar on the screenshot to show the approximate shape of the sensor. Doing perception inside the collision system doubled the number of objects that need a broadphase collision check. There are also many completely useless broadphase collision checks between two sensors. Putting the perception into the physics system was just a very bad idea! But the main waste of CPU cycles came from gathering perception data in EVERY FRAME. That's just not necessary.
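A rough back-of-the-envelope calculation shows how much doubling the object count matters. It assumes the ca. 25 entities of the test scene, one sensor per entity, and counts the worst-case number of object pairs. A real broadphase tests far fewer pairs than this upper bound, so read the numbers as a trend, not a measurement. The function name is made up for this sketch.

```python
from math import comb


def worst_case_pairs(entities: int) -> dict:
    """Upper bound on object pairs with and without one sensor shape per entity."""
    return {
        "bodies_only": comb(entities, 2),       # pairs among the real bodies
        "with_sensors": comb(2 * entities, 2),  # bodies plus one sensor each
        "sensor_sensor": comb(entities, 2),     # pairs that can never be useful
    }


counts = worst_case_pairs(25)  # ca. ten missiles + 15 spacecraft
# 300 possible pairs without sensors, 1225 with them,
# and 300 of those are sensor-vs-sensor pairs.
```

So doubling the number of objects roughly quadruples the number of possible pairs, and about a quarter of them are sensor-versus-sensor pairs that carry no information at all.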

The Solution

The solution is easy: move the perception gathering out of the collision system and don't gather every frame. It took me a few hours to move perception into the AI system. Have a look at the nice result:
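The idea can be sketched roughly like this. This is not my actual game code (which is C#/XNA). The class names, the plain distance check, and the 2D positions are assumptions made up for this example; only the one-second gathering interval is what I really use.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Entity:
    name: str
    x: float
    y: float
    sensor_radius: float = 100.0  # hypothetical sensor range
    visible: List[str] = field(default_factory=list)


class PerceptionSystem:
    """Lives in the AI system and gathers perception only once per interval."""

    def __init__(self, interval: float = 1.0) -> None:
        self.interval = interval
        self._elapsed = interval  # forces a gather on the first update
        self.gather_count = 0

    def update(self, dt: float, entities: List[Entity]) -> None:
        self._elapsed += dt
        if self._elapsed < self.interval:
            return  # most frames end here and cost almost nothing
        self._elapsed = 0.0
        self.gather_count += 1
        for e in entities:
            r2 = e.sensor_radius ** 2
            # Plain squared-distance check instead of a sensor collision shape.
            e.visible = [
                o.name for o in entities
                if o is not e and (o.x - e.x) ** 2 + (o.y - e.y) ** 2 <= r2
            ]


ships = [Entity("player", 0, 0), Entity("enemy", 50, 0), Entity("far", 500, 0)]
perception = PerceptionSystem(interval=1.0)
for _ in range(8):  # eight updates of 0.25 s = two seconds of game time
    perception.update(0.25, ships)
```

After these eight updates the gathering has run only twice, and the AI simply keeps using the `visible` list from the last gather in between. The check is still quadratic in the number of entities, but it runs once per second instead of every frame, and it no longer adds sensor shapes to the physics broadphase.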

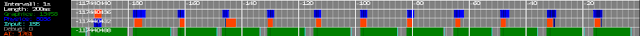

Good Performance (Xbox): Perception in AI system, gathering interval one second

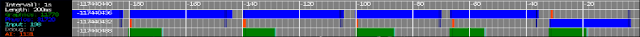

For comparison - Bad Performance (Xbox): Perception in physics system

Now I have frame rates between 70 and 80 fps, and all tasks have to wait for the graphics system. This means I now have plenty of CPU cycles left to increase the number of enemies, implement cool new weapons or improve my AI.